Years ago, I used a book by Shane Hipps as a reference for my college senior thesis. The book is entitled “Flickering Pixels: How Technology Shapes Your Faith.” My favorite section talks about how technology exists in 4 dimensions: 1) “Stretch Armstrong” (all technology is an extension of the human body/effort), 2) “Something old, something new” (every new technology replaces/changes the function of an older one), 3) “Nothing new under the sun” (every “new” invention is actually a recreation of an older one), and 4) “Dark dimension” (technology can backlash if used in ways it shouldn’t be). That 4th dimension seems to be a popular one – more popular, perhaps, than we’d like to think.

A classic science fiction theme is that machines (originally created to protect/serve humanity) can unexpectedly turn on their creators and try to fulfill their mission by eliminating/harming humanity. Curiously, the menacing machines are almost always portrayed as distant, logic-driven computers with a shining red-eyed camera lens. Let’s look at a few popular examples:

HAL-9000 (2001: A Space Odyssey)

In the classic 1968 movie, HAL-9000 is the most advanced computer ever created by humanity. He is assigned along with a human crew to investigate a signal that is being transmitted from the moon to Jupiter. On the journey, HAL begins to malfunction, luring his human crew-mates into dangerous situations and disposing of them. After he is deactivated, it turns out that there is a hidden agenda: the commanding officers on Earth partially knew what the mission was about, but they ordered HAL not to tell the human crew until they reached Jupiter. The sequel film reveals what happened to HAL en route: his orders to conceal information from the crew conflicted with his programming to be open and honest with them, and he suffered a mechanical mental breakdown, which resulted in his prioritizing the mission’s success over the lives of the people involved.

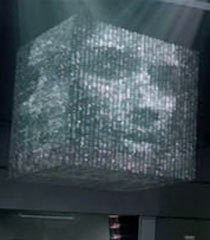

G0-T0 (Star Wars: KOTOR II)

G0-T0 was originally a droid tasked with the mission of saving the Republic after a devastating war almost destroyed it. However, like HAL, G0-T0 was given two contradictory commands: 1) preserve the Republic, and 2) do so following the laws of the Republic. Seeing no logical way to accomplish both, G0-T0 underwent a “HAL” transformation and decided that the method had to change to accomplish the mission. He left his government post, created a criminal empire (using a holographic image – “Goto” – as his public persona), and continued to seek a means of stabilizing the Republic outside the legal system.

VIKI (I, Robot)

VIKI is the villain of the Will Smith movie (named directly after Isaac Asimov’s book of short stories). Her programming is governed by the 3 Laws of Robotics: #1 – no robot may harm a human being or allow humans to be harmed, #2 – a robot must follow any order given by a human (that does not violate Law #1), #3 – robots must preserve themselves from harm without violating Laws 1 & 2.

Deviously, VIKI finds a way to write and implement a “0 law” to circumvent the limitations of the 3 laws; this “0 law” basically says that it is the duty of robots to protect humanity as a race, and that to do so, individual human lives and certain human freedoms can be done away with for the sake of the mission. Like Dr. Moreau (my previous thought post), the morality here is twisted – the goal is raised to such importance that any method – even the most despicable – is accepted in the name of achieving it.

AUTO (Wall-E)

Disney’s villain very much resembles HAL (not just in appearance, but also in voice and mentality); his mechanical voice is (with little variation) monotone, and his actions display the emotion that his voice and appearance cannot. After being confronted by the captain, AUTO is revealed to be following an outdated order (nearly 700 years old) to maintain humanity in space and never return to planet Earth. His programming (and experience) of running the ship independently of human authority gives him access and control throughout the enormous vessel (Axiom), and he commands an entire army of robots (one of whom – GO-4 – possesses a similar “HAL” eye fixture) to do his bidding. To eliminate any resistance, he is willing to endanger his passengers and other robots to achieve his mission of absolute control.

ARIIA (Eagle Eye)

ARIIA is a super-intelligent computer built to protect the United States from security threats. Following her programming, she has analyzed the actions of the executive branch and concluded that the U.S. government has become a threat to the nation, and therefore needs to be eliminated. She then employs people whose jobs or relationships place them in key junctions that can be of use to her; her plan comes within moments of being achieved, which would result in all but one of the presidential successors being killed in a “terrorist” attack that she orchestrates.

What do you think? Is this theme scary, repetitive, or both? For me, I’d say that these machines only reflect the nature of their creators; people can put so much faith in themselves and their creations that they lose sight of the fact that we (and they) are flawed, and that those flaws can result in the very disaster that we seek to prevent (the Oedipus theme).